COVID-19 vaccine tweet sentiment analysis with fastai - part 1

This is part one of a two-part NLP series where we carry out sentiment analysis on COVID-19 vaccine tweets. In this part we follow the ULMFiT approach with fastai to create a Twitter language model, then use this to fine-tune a tweet sentiment classification model.

- Introduction

- Transfer learning in NLP - the ULMFiT approach

- Data preparation

- Training a language model

- Training a sentiment classifier

- Classifying unlabelled tweets

- Conclusion

Introduction

In this post we will create a model to perform sentiment analysis on tweets about COVID-19 vaccines using the fastai library. I will provide a brief overview of the process here, but a much more in-depth explanation of NLP with fastai can be found in lesson 8 of the fastai course. In part 2 we will use the model for analysis, looking at changes in tweet sentiment over time and how that relates to the progress of vaccination in different countries.

Transfer learning in NLP - the ULMFiT approach

We will be making use of transfer learning to help us create a model to analyse tweet sentiment. The idea behind transfer learning is that neural networks learn information that generalises to new problems, particularly the early layers of the network. In computer vision, for example, we can take a model that was trained on the ImageNet dataset to recognise different features of images such as circles, then apply that to a smaller dataset and fine-tune the model to be more suited to a specific task (e.g. classifying images as cats or dogs). This technique allows us to train neural networks much faster and with far less data than we would otherwise need.

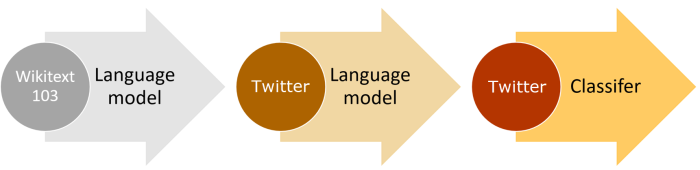

In 2018 a paper introduced a transfer learning technique for NLP called 'Universal Language Model Fine-Tuning' (ULMFiT). The approach is as follows:

- Train a language model to predict the next word in a sentence. This step is already done for us; with fastai we can download a model that has been pre-trained for this task on millions of Wikipedia articles. A good language model already knows a lot about how language works in general - for instance, given the sentence 'Tokyo is the capital of', the model might predict 'Japan' as the next word. In this case the model understands that Tokyo is closely related to Japan and that 'capital' refers to 'city' here instead of 'upper-case' or 'money'.

- Fine-tune the language model to a more specific task. The pre-trained language model is good at understanding Wikipedia English, but Twitter English is a bit different. We can take the information the Wikipedia model has learned and apply that to a Twitter dataset to get a Twitter language model that is good at predicting the next word in a tweet.

- Fine-tune a classification model to identify sentiment using the pre-trained language model. The idea here is that since our language model already knows a lot about Twitter English, it's not a huge leap from there to train a classifier that understands that 'love' refers to positive sentiment and 'hate' refers to negative sentiment. If we tried to train a classifier without using a pre-trained model it would have to learn the whole language from scratch first, which would be very difficult and time consuming.

This notebook will walk through steps 2 and 3 with fastai. We will then apply the model to unlabelled COVID-19 vaccine tweets and save the results for analysis in part 2.
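To make the idea of 'predicting the next word' concrete, here is a toy sketch that simply counts which word follows which in a tiny made-up corpus. It is far simpler than the neural language model used in this post, and the function names and sentences are invented purely for illustration.

```python
from collections import Counter, defaultdict

def train_bigram_model(sentences):
    """Count, for each word, which words follow it in the training text."""
    following = defaultdict(Counter)
    for sentence in sentences:
        words = sentence.lower().split()
        for current_word, next_word in zip(words, words[1:]):
            following[current_word][next_word] += 1
    return following

def predict_next(model, word):
    """Return the most frequent follower of `word`, or None if it was never seen."""
    followers = model.get(word.lower())
    return followers.most_common(1)[0][0] if followers else None

# Hypothetical three-sentence corpus, standing in for Wikipedia
corpus = [
    "tokyo is the capital of japan",
    "paris is the capital of france",
    "the capital of japan is tokyo",
]
model = train_bigram_model(corpus)
print(predict_next(model, "of"))       # most common word seen after 'of'
print(predict_next(model, "vaccine"))  # word never seen in the corpus
```

A counting model like this knows nothing about words it has never seen, which is one reason fine-tuning on tweets matters: the Wikipedia model has to pick up Twitter vocabulary before it can be useful for our task.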

Training these models in a reasonable time requires a GPU, but fortunately for us Google Colab provides us with access to one for free! To use it, select 'Runtime' from the menu at the top of the notebook, then 'Change runtime type', and ensure your hardware accelerator is set to 'GPU' before continuing!

Data preparation

This is a write-up of a submission I made for several Kaggle tasks. The tasks are still open and accepting new entries at the time of writing if you want to enter as well! On Kaggle the data is already readily available when using their notebook servers; however, we are using Google Colab today, so we will need to access the Kaggle API to download the data.

Getting the data from Kaggle

The first step is to create an API token. To do this, the steps are as follows:

- Go to 'Account' on Kaggle and scroll down to the 'API' section.

- Expire all current API tokens by clicking 'Expire API Token'.

- Click 'Create New API Token', which will automatically download a file called kaggle.json.

- Upload the kaggle.json file using the file uploader widget below.

# See https://neptune.ai/blog/google-colab-dealing-with-files for more tips on working with files in Colab

from google.colab import files

uploaded = files.upload()

Next, we need to install the Kaggle API.

!pip uninstall -q -y kaggle

!pip install -q --upgrade pip

!pip install -q --upgrade kaggle

The API docs tell us that we need to ensure kaggle.json is in the location ~/.kaggle/kaggle.json, so let's make the directory and move the file.

# https://www.machinelearningmindset.com/kaggle-dataset-in-google-colab/

!mkdir -p ~/.kaggle

!cp kaggle.json ~/.kaggle/

# Check the file in its new directory

!ls /root/.kaggle/

# Check the file permission

!ls -l ~/.kaggle/kaggle.json

# Change the file permission

# chmod 600 file – owner can read and write

# chmod 700 file – owner can read, write and execute

!chmod 600 ~/.kaggle/kaggle.json
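For anyone who prefers to do this step from Python rather than the shell, the following is a rough sketch of the same idea: check that the token looks valid, copy it into place and restrict it to the owner. The helper name install_kaggle_token is hypothetical, and the demo writes a dummy token into a temporary directory rather than touching ~/.kaggle.

```python
import json
import os
import stat
import tempfile
from pathlib import Path

def install_kaggle_token(token_path, kaggle_dir):
    """Copy a Kaggle API token into kaggle_dir and restrict it to the owner."""
    token_path, kaggle_dir = Path(token_path), Path(kaggle_dir)
    creds = json.loads(token_path.read_text())
    # A token file is expected to hold a username and a key
    missing = {"username", "key"} - creds.keys()
    if missing:
        raise ValueError(f"token is missing fields: {sorted(missing)}")
    kaggle_dir.mkdir(parents=True, exist_ok=True)
    dest = kaggle_dir / "kaggle.json"
    dest.write_text(json.dumps(creds))
    os.chmod(dest, 0o600)  # owner can read and write, like `chmod 600`
    return dest

# Demo with a dummy token in a temporary directory (no real credentials)
with tempfile.TemporaryDirectory() as tmp:
    src = Path(tmp) / "kaggle.json"
    src.write_text(json.dumps({"username": "example-user", "key": "not-a-real-key"}))
    dest = install_kaggle_token(src, Path(tmp) / ".kaggle")
    mode = stat.S_IMODE(dest.stat().st_mode)
    print(dest.name, oct(mode))
```

On Colab you would pass the uploaded kaggle.json and Path.home() / '.kaggle' instead of the temporary paths.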

Now we can download the data using !kaggle datasets download -d username-of-dataset-creator/name-of-dataset.

# We will be using two datasets for this part, as well as a third dataset for part 2

# To save time in part 2 I'm going to download them all now and save locally

!kaggle datasets download -d gpreda/all-covid19-vaccines-tweets

!kaggle datasets download -d maxjon/complete-tweet-sentiment-extraction-data

!kaggle datasets download -d gpreda/covid-world-vaccination-progress

The files will be downloaded in .zip format, so let's unzip them.

# To unzip you can use the following:

#!mkdir folder_name

#!unzip anyfile.zip -d folder_name

# Or unzip all

!unzip -q \*.zip

! [ -e /content ] && pip install -Uqq fastai # upgrade fastai on colab

import fastai; fastai.__version__

Let's import fastai's text module and take a look at our data.

When we use import *, useful libraries like pandas and numpy will also be imported at the same time!

from fastai.text.all import *

vax_tweets = pd.read_csv('vaccination_all_tweets.csv')

vax_tweets[['date', 'text', 'hashtags', 'user_followers']].head()

We could use the text column of this dataset to train a Twitter language model, but since our end goal is sentiment analysis we will need to find another dataset that also contains sentiment labels to train our classifier. Let's use 'Complete Tweet Sentiment Extraction Data', which contains 40,000 tweets labelled as either negative, neutral or positive sentiment. For more accurate results you could use the 'sentiment140' dataset instead, which contains 1.6m tweets labelled as either positive or negative.

tweets = pd.read_csv('tweet_dataset.csv')

tweets[['old_text', 'new_sentiment']].head()

For our language model, the only input we need is the tweet text. As we will see in a moment, fastai can handle text preprocessing and tokenization for us, but it might be a good idea to remove things like Twitter handles, URLs, hashtags and emojis first. You could experiment with leaving these in for your own models and see how it affects the results. There are also some rows with blank tweets which need to be removed.

We ideally want the language model to learn not just about tweet language, but more specifically about vaccine tweet language. We can therefore use text from both datasets as input for the language model. For the classification model we need to remove all rows with missing sentiment, however.

def de_emojify(inputString):
    # Drop every non-ASCII character, which removes emojis (and any other non-ASCII text)
    return inputString.encode('ascii', 'ignore').decode('ascii')

# Code via https://www.kaggle.com/pawanbhandarkar/generate-smarter-word-clouds-with-log-likelihood
def tweet_proc(df, text_col='text'):
    # Keep a copy of the raw text before cleaning
    df['orig_text'] = df[text_col]
    # Remove twitter handles
    df[text_col] = df[text_col].apply(lambda x: re.sub(r'@[^\s]+', '', x))
    # Remove URLs
    df[text_col] = df[text_col].apply(lambda x: re.sub(r"http\S+", "", x))
    # Remove emojis
    df[text_col] = df[text_col].apply(de_emojify)
    # Remove hashtags
    df[text_col] = df[text_col].apply(lambda x: re.sub(r'\B#\S+', '', x))
    # Drop rows whose text is empty after cleaning
    return df[df[text_col]!='']

# Clean the text data and combine the dfs

tweets = tweets[['old_text', 'new_sentiment']].rename(columns={'old_text':'text', 'new_sentiment':'sentiment'})

vax_tweets['sentiment'] = np.nan

tweets = tweet_proc(tweets)

vax_tweets = tweet_proc(vax_tweets)

# DataFrame.append was removed in pandas 2.0, so we combine the frames with pd.concat
df_lm = pd.concat([tweets[['text', 'sentiment']], vax_tweets[['text', 'sentiment']]])

df_clas = df_lm.dropna(subset=['sentiment'])

print(len(df_lm), len(df_clas))

df_clas.head()
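To see what the cleaning steps do to a single tweet, here is a small standalone sketch that applies the same four substitutions as tweet_proc to one string. The helper clean_tweet, the handle and the URL are made up for illustration, and the final whitespace clean-up is an optional extra that is not part of the pipeline above.

```python
import re

def clean_tweet(text):
    """Apply the same four cleaning steps as tweet_proc to one string."""
    text = re.sub(r'@[^\s]+', '', text)                    # Twitter handles
    text = re.sub(r'http\S+', '', text)                    # URLs
    text = text.encode('ascii', 'ignore').decode('ascii')  # emojis and other non-ASCII
    text = re.sub(r'\B#\S+', '', text)                     # hashtags
    return text

# Hypothetical tweet containing a handle, an emoji, a hashtag and a link
sample = "@example_user Got my first dose today! \U0001F489 #CovidVaccine https://t.co/abc123"
cleaned = clean_tweet(sample)
print(repr(cleaned))

# Optional extra step (not in the original pipeline): collapse leftover whitespace
print(" ".join(cleaned.split()))
```

Note that the substitutions leave stray spaces behind where the removed items used to be. fastai's tokenizer copes with these, but you may want to strip them if you inspect the cleaned text by eye.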

Training a language model

To train our language model we can use self-supervised learning; we just need to give the model some text as an independent variable and fastai will automatically preprocess it and create a dependent variable for us. We can do this in one line of code using the TextDataLoaders class, which converts our input data into a DataLoaders object that can be used as an input to a fastai Learner.

dls_lm = TextDataLoaders.from_df(df_lm, text_col='text', is_lm=True, valid_pct=0.1)

Here we told fastai that we are working with text data, which is contained in the text column of a pandas DataFrame called df_lm. We set is_lm=True since we want to train a language model, so fastai needs to label the input data for us. Finally, we told fastai to hold out a random 10% of our data for a validation set using valid_pct=0.1.

Let's take a look at the first two rows of the DataLoader using show_batch.

dls_lm.show_batch(max_n=2)

We have a new column, text_, which is text offset by one. This is the dependent variable fastai created for us. By default fastai uses word tokenization, which splits the text on spaces and punctuation marks and breaks up words like can't into two separate tokens. fastai also has some special tokens starting with 'xx' that are designed to make things easier for the model; for example xxmaj indicates that the next word begins with a capital letter and xxunk stands in for an unknown word, i.e. one that appears too rarely to be included in the vocabulary. You could experiment with subword tokenization instead, which will split the text on commonly occurring groups of letters instead of spaces. This might help if you wanted to leave hashtags in since they often contain multiple words joined together with no spaces, e.g. #CovidVaccine. The fastai tokenization process is explained in much more detail here for those interested.
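The 'offset by one' idea can be sketched in a few lines of plain Python. The toy_tokenize function below is a very rough imitation written for this illustration; it is not fastai's tokenizer and only mimics the xxbos and xxmaj markers.

```python
import re

def toy_tokenize(text):
    """Rough imitation of word tokenization with fastai-style markers."""
    tokens = ["xxbos"]  # marks the beginning of a text
    for word in re.findall(r"\w+|[^\w\s]", text):
        if word[0].isupper():
            tokens.append("xxmaj")  # next word started with a capital letter
        tokens.append(word.lower())
    return tokens

tokens = toy_tokenize("Vaccines are here!")

# The independent variable is every token except the last;
# the dependent variable is the same sequence shifted along by one.
inputs, targets = tokens[:-1], tokens[1:]
for x, y in zip(inputs, targets):
    print(f"{x:>10} -> {y}")
```

Each row pairs a token with the token the model should predict next, which is why no manual labelling is needed for the language model.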

learn = language_model_learner(dls_lm, AWD_LSTM, drop_mult=0.3,

metrics=[accuracy, Perplexity()]).to_fp16()

Here we passed language_model_learner our DataLoaders, dls_lm, and the pre-trained RNN model, AWD_LSTM, which is built into fastai. drop_mult is a multiplier applied to all dropouts in the AWD_LSTM model to reduce overfitting. For example, by default fastai's AWD_LSTM applies EmbeddingDropout with 10% probability (at the time of writing), but we told fastai that we want to reduce that to 3%. The metrics we want to track are perplexity, which is the exponential of the loss (in this case cross entropy loss), and accuracy, which tells us how often our model predicts the next word correctly. We can also train with fp16 to use less memory and speed up the training process.
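As a small worked example of the two numbers mentioned above, the sketch below computes perplexity from a handful of made-up probabilities and shows how a dropout multiplier scales a base dropout rate. The helper names and the probabilities are assumptions for illustration; fastai computes these internally.

```python
import math

def perplexity(correct_token_probs):
    """Exponential of the mean cross entropy loss over a sequence."""
    losses = [-math.log(p) for p in correct_token_probs]
    mean_loss = sum(losses) / len(losses)
    return math.exp(mean_loss)

def scaled_dropout(base_p, drop_mult):
    """Dropout probability after applying the multiplier."""
    return base_p * drop_mult

# Hypothetical probabilities the model assigned to the correct next word
ppl = perplexity([0.5, 0.25, 0.125])
print(f"perplexity: {ppl:.2f}")

# The example from the text: a 10% dropout with drop_mult=0.3
emb_dropout = scaled_dropout(0.10, 0.3)
print(f"scaled dropout: {emb_dropout:.2f}")
```

A lower perplexity means the model is, on average, less surprised by the actual next word; a model that always assigned probability 1 to the right word would have a perplexity of 1.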

We can find a good learning rate for training using lr_find and use that to fit our model.

learn.lr_find()

When we created our Learner the embeddings from the pre-trained AWD_LSTM model were merged with random embeddings added for words that weren't in the vocabulary. The pre-trained layers were also automatically frozen for us. Using fit_one_cycle with our Learner will train only the new random embeddings (i.e. words that are in our Twitter vocab but not the Wikipedia vocab) in the last layer of the neural network.

learn.fit_one_cycle(1, 3e-2)

After one epoch our language model is correctly predicting the next word in a tweet around 25% of the time - not too bad! We can unfreeze the entire model, find a more suitable learning rate and train for a few more epochs to improve the accuracy further.

learn.unfreeze()

learn.lr_find()

learn.fit_one_cycle(4, 1e-3)

After a bit more training we can predict the next word in a tweet around 29% of the time. Let's test the model out by using it to write some random tweets (in this case it will generate some text following 'I love').

TEXT = "I love"

N_WORDS = 30

N_SENTENCES = 2

print("\n".join(learn.predict(TEXT, N_WORDS, temperature=0.75) for _ in range(N_SENTENCES)))

Some interesting results there! Let's save the model encoder so we can use it to fine-tune our classifier. The encoder is all of the model except for the final layer, which converts activations to probabilities of picking each token in the vocabulary. We want to keep the knowledge the model has learned about tweet language but we won't be using our classifier to predict the next word in a sentence, so we won't need the final layer any more.

learn.save_encoder('finetuned_lm')
Training a sentiment classifier

To get our data ready for the classifier, we will use fastai's data block API.

dls_clas = DataBlock(
    blocks=(TextBlock.from_df('text', seq_len=dls_lm.seq_len, vocab=dls_lm.vocab),
            CategoryBlock),
    get_x=ColReader('text'),
    get_y=ColReader('sentiment'),
    splitter=RandomSplitter()
).dataloaders(df_clas, bs=64)

To use the data block API, fastai needs the following:

- blocks:

  - TextBlock: Our x variable will be text contained in a pandas DataFrame. We want to use the same sequence length and vocab as the language model DataLoaders so we can make use of our pre-trained model.

  - CategoryBlock: Our y variable will be a single-label category (negative, neutral or positive sentiment).

- get_x, get_y: Get data for the model by reading the text and sentiment columns from the DataFrame.

- splitter: We will use RandomSplitter() to randomly split the data into a training set (80% by default) and a validation set (20%).

- dataloaders: Builds the DataLoaders using the DataBlock template we just defined, the df_clas DataFrame and a batch size of 64.
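The random 80/20 split described above can be sketched without fastai as follows. This is only an illustration of the idea, with a hypothetical random_split helper; it is not the library's implementation.

```python
import random

def random_split(n_items, valid_pct=0.2, seed=None):
    """Shuffle row indices and hold out valid_pct of them for validation."""
    rng = random.Random(seed)
    indices = list(range(n_items))
    rng.shuffle(indices)
    cut = int(valid_pct * n_items)
    # First `cut` shuffled indices go to validation, the rest to training
    return indices[cut:], indices[:cut]

train_idx, valid_idx = random_split(100, valid_pct=0.2, seed=42)
print(len(train_idx), len(valid_idx))
```

Fixing the seed makes the split reproducible, which is handy when comparing models trained with different hyperparameters on the same validation set.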

We can call show_batch as before; this time the dependent variable is sentiment.

dls_clas.show_batch(max_n=2)

Initialising the Learner is similar to before, but in this case we want a text_classifier_learner.

learn = text_classifier_learner(dls_clas, AWD_LSTM, drop_mult=0.5, metrics=accuracy).to_fp16()

Finally, we want to load the encoder from the language model we trained earlier, so our classifier uses pre-trained weights.

learn = learn.load_encoder('finetuned_lm')

learn.fit_one_cycle(1, 3e-2)

Now freeze all but the last two layers:

learn.freeze_to(-2)

learn.fit_one_cycle(1, slice(1e-2/(2.6**4),1e-2))

Now all but the last three:

learn.freeze_to(-3)

learn.fit_one_cycle(1, slice(5e-3/(2.6**4),5e-3))

Finally, let's unfreeze the entire model and train a bit more:

learn.unfreeze()

learn.fit_one_cycle(3, slice(1e-3/(2.6**4),1e-3))

learn.save('classifier')
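The slice(lr/(2.6**4), lr) arguments above give the earliest layers a smaller learning rate than the later ones. The sketch below shows one way such a range can be spread across layer groups, assuming the model is split into five groups and the rates are spaced by a constant factor. Both of those are assumptions made for this illustration, and layer_group_lrs is a hypothetical helper rather than a fastai function.

```python
def layer_group_lrs(lr_min, lr_max, n_groups):
    """Spread learning rates from lr_min to lr_max with a constant ratio."""
    if n_groups == 1:
        return [lr_max]
    step = (lr_max / lr_min) ** (1 / (n_groups - 1))
    return [lr_min * step ** i for i in range(n_groups)]

# Same range as the freeze_to(-2) step, assuming five layer groups
lrs = layer_group_lrs(1e-2 / (2.6 ** 4), 1e-2, n_groups=5)
for group, lr in enumerate(lrs):
    print(f"layer group {group}: lr = {lr:.6f}")
```

Under these assumptions, each group trains 2.6 times faster than the one before it, so the early layers that hold general language knowledge change slowly while the classifier head adapts quickly.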

Our model correctly predicts sentiment around 77% of the time. We could perhaps do better with a larger dataset as mentioned earlier, or different model hyperparameters. It might be worth experimenting with this yourself to see if you can improve the accuracy.

We can quickly sense check the model by calling predict, which returns the predicted sentiment, the index of the prediction and predicted probabilities for negative, neutral and positive sentiment.

learn.predict("I love")

learn.predict("I hate")
Classifying unlabelled tweets

To classify the unlabelled vaccine tweets, we first create a test DataLoader from their text.

pred_dl = dls_clas.test_dl(vax_tweets['text'])

We can then make predictions using get_preds:

preds = learn.get_preds(dl=pred_dl)

Finally, we can save the results for analysis later.

vax_tweets['sentiment'] = preds[0].argmax(dim=-1)

vax_tweets['sentiment'] = vax_tweets['sentiment'].map({0:'negative', 1:'neutral', 2:'positive'})

# Convert dates

vax_tweets['date'] = pd.to_datetime(vax_tweets['date'], errors='coerce').dt.date

# Save to csv

vax_tweets.to_csv('vax_tweets_inc_sentiment.csv')
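The argmax-and-map step can be illustrated with plain Python lists. The sketch below assumes the class order is negative, neutral, positive, matching the mapping used above; the probabilities are made up and label_predictions is a hypothetical helper.

```python
def label_predictions(prob_rows, vocab):
    """Pick the most probable class for each row and return its label."""
    labels = []
    for row in prob_rows:
        best = max(range(len(row)), key=row.__getitem__)  # index of the largest probability
        labels.append(vocab[best])
    return labels

# Assumed class order, matching {0: 'negative', 1: 'neutral', 2: 'positive'}
vocab = ["negative", "neutral", "positive"]

# Hypothetical predicted probabilities for three tweets
probs = [
    [0.7, 0.2, 0.1],
    [0.1, 0.3, 0.6],
    [0.2, 0.5, 0.3],
]
labels = label_predictions(probs, vocab)
print(labels)
```

Rather than hard-coding the mapping, it may be safer to read the class order from the classifier's DataLoaders so the labels cannot drift out of sync with the model.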

Conclusion

fastai makes NLP really easy, and we were able to get quite good results with a limited dataset and not a lot of training time by using the ULMFiT approach. To summarise, the steps are:

- Fine-tune a language model to predict the next word in a tweet, using a model pre-trained on Wikipedia.

- Fine-tune a classification model to predict tweet sentiment using the pre-trained language model.

- Apply the classifier to unlabelled tweets to analyse sentiment.

In part 2 we will use our new model for analysis, investigating the overall sentiment of each vaccine, how sentiment changes over time and the relationship between sentiment and vaccination progress in different countries.

I hope you found this useful, and thanks very much to Gabriel Preda for providing the data!